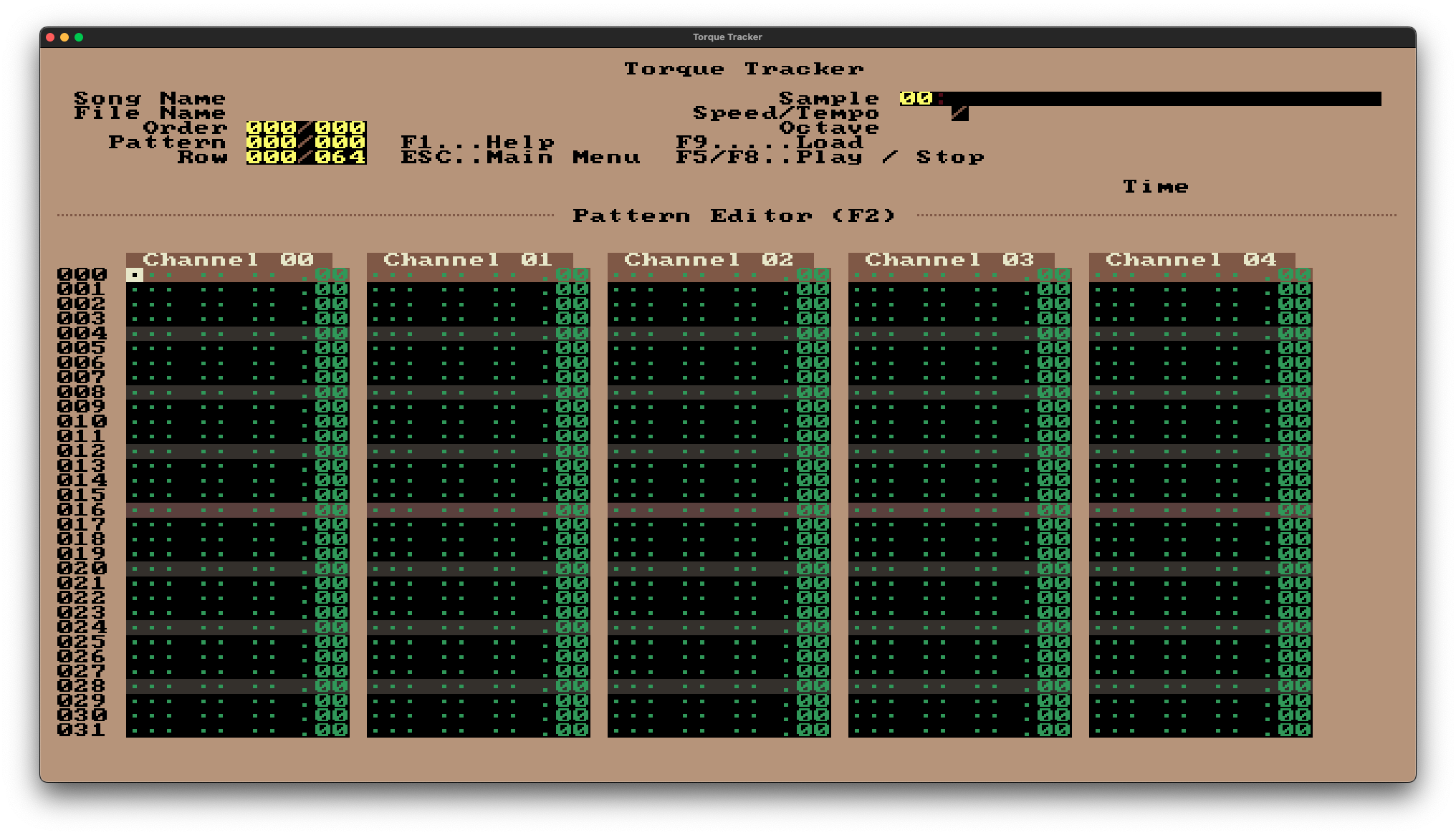

# Announcing Torque Tracker

Torque Tracker is a work-in-progress reimplementation of Schism Tracker, which is itself a reimplementation of Impulse Tracker. Trackers are an early form of music creation software. In recent years a couple of hardware trackers were released, which is where I learned about them. Torque Tracker is the result of me really liking the keyboard-driven workflow of Schism Tracker and wanting a project to work on. It has reached a state where the basic features work, so I wanted to publish it.

## How to use / try it

It has a keyboard-based UI, so navigation is mostly done with the arrow keys; tab and shift-tab also work in some places. You can switch between pages by pressing the F-keys or by using the main menu, which opens when you press Escape. A lot of what's in there isn't implemented yet.

- You can use the sample page (F3) to load one or more samples (press Enter).

- You can go to the pattern page (F2) and enter a pattern.

- While you are in the first (note) column, the keyboard is turned into a MIDI keyboard (this assumes a QWERTY layout, sorry Dvorak people). The second column controls which sample should be played. Using the + and - keys, you can change the pattern you are editing.

- On the F11 page you can set the order of the patterns and change the volume and panning of the channels (press F11 twice for volume).

- You can use the F12 page to change the tempo of the song (using both the tempo and the speed sliders).

- You can play the currently selected pattern by pressing F6 and the whole song by pressing F5. Playing the whole song means following the patterns in the order you have arranged on the F11 page, so if you haven't set an order there, F5 won't work.

- You can stop playback by pressing F8.

This is all original Schism Tracker workflow, so don't complain to me if you don't like it :3

If you run into any `wgpu` issues, you can try disabling the default features and enabling the `soft_scaling` feature.

## What I want to add

- Project loading and saving (loading works partially)

- Effects (as in special behaviour you can attach to a note event)

- Settings (most importantly audio settings)

- Mouse Support

- More UI Pages / Detail

The effects in particular will require a lot of work, simply because there are so many of them. They can also do very different things (one, for example, skips to a different place in the song), so I need to find a fairly flexible API for them.
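To make the API problem a bit more concrete, here is a minimal sketch of one possible shape; it is not Torque Tracker's actual design. Effects are an enum, and applying one gets mutable access to both the channel state and the global playback state, so a channel-local effect and a song-level jump go through the same entry point. `Effect`, `ChannelState`, `PlaybackState`, `Position` and `apply_effect` are all hypothetical names, and the clamping rules are assumptions.

```rust
/// Where playback continues (hypothetical type).
#[derive(Debug, Clone, Copy, PartialEq)]
struct Position {
    order: usize, // index into the pattern order list
    row: usize,
}

/// A few example effects; real trackers have many more.
#[derive(Debug, Clone, Copy)]
enum Effect {
    SetVolume(u8),       // only touches one channel
    SetSpeed(u8),        // touches global playback state
    PositionJump(usize), // changes where the song continues
}

struct ChannelState {
    volume: u8,
}

struct PlaybackState {
    speed: u8,
    next: Position,
}

/// Every effect gets access to both scopes, so channel-local and song-level
/// effects share one entry point.
fn apply_effect(effect: Effect, channel: &mut ChannelState, playback: &mut PlaybackState) {
    match effect {
        // Clamp to 0..=64, the volume range Impulse Tracker uses.
        Effect::SetVolume(v) => channel.volume = v.min(64),
        // A speed of 0 would stall playback, so clamp to at least 1 (an assumption).
        Effect::SetSpeed(s) => playback.speed = s.max(1),
        // Continue at the first row of the given order entry.
        Effect::PositionJump(order) => playback.next = Position { order, row: 0 },
    }
}
```

The trade-off of this shape is that every effect can touch everything; a stricter API could split effects by scope, at the cost of more types.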

I also want to try adding some accessibility features via AccessKit. I think it should be relatively easy to get something good there, because the UI is static.

## Technical Stuff

Both the UI and the sound engine are completely custom. For the audio part, this is because the Rust audio ecosystem is pretty small and I didn't find an audio rendering library that was both flexible enough and anywhere close to complete. The UI ecosystem looks a lot better, but I don't think the keyboard-driven, static UI would have been possible with any of the Rust UI libraries (not that I know of any non-Rust UI library that would allow it either).

### UI

The UI is based on a 640x400 pixel buffer that gets scaled to fit the current window size. Every UI element has a static, hardcoded position, so I have no layout algorithm at all. Text uses an 8x8 bitmap font, and every UI element is placed on a character position, like in a terminal. Navigation between UI elements is also hardcoded.
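As an illustration of drawing on a fixed character grid, here is a minimal sketch assuming a row-major buffer with one palette index per pixel, and glyphs stored as eight bytes (one byte per pixel row, most significant bit on the left). `Screen` and `draw_glyph` are hypothetical names, not Torque Tracker's code. With 8x8 cells, the 640x400 buffer works out to an 80x50 character grid.

```rust
const WIDTH: usize = 640;
const HEIGHT: usize = 400;
const GLYPH: usize = 8;
// Character grid: 80 columns x 50 rows.
const COLS: usize = WIDTH / GLYPH;
const ROWS: usize = HEIGHT / GLYPH;

/// Framebuffer holding one palette index per pixel (hypothetical type).
struct Screen {
    pixels: Vec<u8>,
}

impl Screen {
    fn new() -> Self {
        Screen { pixels: vec![0; WIDTH * HEIGHT] }
    }

    /// Draw an 8x8 glyph into character cell (col, row).
    /// Each byte of `glyph` is one pixel row; the most significant bit is the leftmost pixel.
    fn draw_glyph(&mut self, col: usize, row: usize, glyph: &[u8; 8], fg: u8, bg: u8) {
        assert!(col < COLS && row < ROWS, "cell outside the character grid");
        for (dy, &bits) in glyph.iter().enumerate() {
            for dx in 0..GLYPH {
                let on = (bits >> (7 - dx)) & 1 == 1;
                let x = col * GLYPH + dx;
                let y = row * GLYPH + dy;
                self.pixels[y * WIDTH + x] = if on { fg } else { bg };
            }
        }
    }
}
```

Because every element sits on a cell, positions can be written as (column, row) pairs, which fits the hardcoded layout described above.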

The program uses a total of 16 colors, which lets me write only the index of a color when drawing the UI. The actual colors are filled in when the buffer is scaled up. If you have `wgpu` enabled (the default), both scaling and color replacement are done entirely on the GPU.
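The GPU path isn't shown here. As a CPU-side sketch of the same idea, the function below upscales a 640x400 buffer of palette indices with nearest-neighbour sampling and resolves each color in the same pass. `scale_and_colorize` and the `0xRRGGBB` palette format are assumptions for illustration, and nearest-neighbour is just one possible choice of scaling.

```rust
const SRC_W: usize = 640;
const SRC_H: usize = 400;

/// 16 colors as 0xRRGGBB (placeholder format, not the program's real palette).
type Palette = [u32; 16];

/// Nearest-neighbour upscale of palette indices to `out_w` x `out_h`,
/// replacing each index with its color while scaling.
fn scale_and_colorize(indices: &[u8], palette: &Palette, out_w: usize, out_h: usize) -> Vec<u32> {
    assert_eq!(indices.len(), SRC_W * SRC_H);
    let mut out = vec![0u32; out_w * out_h];
    for y in 0..out_h {
        let sy = y * SRC_H / out_h; // source row for this output row
        for x in 0..out_w {
            let sx = x * SRC_W / out_w; // source column for this output column
            let index = indices[sy * SRC_W + sx] & 0x0F; // only 16 colors: keep the low 4 bits
            out[y * out_w + x] = palette[index as usize];
        }
    }
    out
}
```

Keeping the UI buffer as indices means it is a quarter the size of an RGBA buffer, and changing the palette never requires redrawing the UI.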

Communication with the audio backend is done only through spinlocks, because the audio thread can't notify the UI thread without losing real-time safety. However, I know that the spinlocks are only ever held while one audio buffer is being rendered, so I don't really spin on them. Instead of spinning, I sleep for the duration of one audio buffer (which depends on the audio settings) before trying again.

For this spin-sleeping I use smol, as having multiple threads that mostly sleep sounds like a great use case for async to me.
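A minimal sketch of the spin-sleep idea, assuming a plain `AtomicBool` lock flag and a blocking `std::thread::sleep` in place of the async timer the post uses (with smol, the sleep would instead be an awaited timer such as `smol::Timer::after`). `SpinFlag`, `lock_sleeping` and `buffer_duration` are hypothetical names. For example, a 512-frame buffer at 48 kHz lasts about 10.7 ms, so that is how long the waiting side would sleep between attempts.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::thread;
use std::time::Duration;

/// How long one audio buffer takes to play: frames / sample rate.
fn buffer_duration(frames: u32, sample_rate: u32) -> Duration {
    Duration::from_secs_f64(frames as f64 / sample_rate as f64)
}

/// Minimal lock flag shared by two threads (hypothetical type).
struct SpinFlag {
    locked: AtomicBool,
}

impl SpinFlag {
    fn new() -> Self {
        SpinFlag { locked: AtomicBool::new(false) }
    }

    /// A single non-blocking attempt to take the lock.
    fn try_lock(&self) -> bool {
        self.locked
            .compare_exchange(false, true, Ordering::Acquire, Ordering::Relaxed)
            .is_ok()
    }

    fn unlock(&self) {
        self.locked.store(false, Ordering::Release);
    }

    /// Waiting side: instead of busy-spinning, sleep for one buffer between
    /// attempts, since the lock is held for at most about that long.
    /// Returns how many attempts it took.
    fn lock_sleeping(&self, buffer: Duration) -> u32 {
        let mut attempts = 1;
        while !self.try_lock() {
            thread::sleep(buffer);
            attempts += 1;
        }
        attempts
    }
}
```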

### Audio

Audio processing currently only does resampling to a different sample rate, plus volume and panning. Resampling is at the core of it all, because it also gives you pitch shifting: playing a sound faster or slower changes its pitch. (If you have a record player, you can try this out.)
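A minimal sketch of resampling as a frame iterator, assuming mono `f32` samples, linear interpolation, equal-temperament pitch (`2^(semitones/12)`) and a linear pan law. The post doesn't say which interpolation or pan law Torque Tracker uses, and `Voice` and `pitch_ratio` are hypothetical names. Sample rate conversion and pitch shifting collapse into one read step: playing a 24 kHz sample at a 48 kHz output rate advances 0.5 input samples per output frame, and raising the note by an octave doubles that step.

```rust
/// Frequency ratio for a note `semitones` away from the sample's base note
/// (equal temperament): +12 semitones doubles the playback speed.
fn pitch_ratio(semitones: f32) -> f32 {
    2f32.powf(semitones / 12.0)
}

/// One mono sample being played back (hypothetical type).
struct Voice<'a> {
    sample: &'a [f32],
    position: f64, // fractional read position into `sample`
    step: f64,     // how far `position` advances per output frame
    volume: f32,   // 0.0 ..= 1.0
    pan: f32,      // 0.0 = hard left, 0.5 = center, 1.0 = hard right
}

impl<'a> Voice<'a> {
    fn new(sample: &'a [f32], sample_rate: u32, output_rate: u32, semitones: f32, volume: f32, pan: f32) -> Self {
        // Rate conversion and pitch shift combine into a single read step.
        let step = sample_rate as f64 / output_rate as f64 * pitch_ratio(semitones) as f64;
        Voice { sample, position: 0.0, step, volume, pan }
    }
}

impl Iterator for Voice<'_> {
    type Item = [f32; 2]; // one stereo frame

    fn next(&mut self) -> Option<[f32; 2]> {
        let i = self.position as usize;
        let current = *self.sample.get(i)?; // past the end: the voice is finished
        let next = self.sample.get(i + 1).copied().unwrap_or(0.0);
        // Linear interpolation between neighbouring input samples.
        let frac = (self.position - i as f64) as f32;
        let value = (current + (next - current) * frac) * self.volume;
        self.position += self.step;
        // Linear pan law (an assumption; other pan laws exist).
        Some([value * (1.0 - self.pan), value * self.pan])
    }
}
```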

The audio rendering is currently an Iterator that produces audio frames. Maybe I will switch to buffer-based rendering at some point, as I have heard that it is faster. This isn't an issue yet, but it may become one once the audio processing gets more features.
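The difference between the two styles can be sketched as follows, assuming stereo `f32` frames; `Frame`, `BufferSource`, `FromIter` and `apply_gain` are hypothetical names. The adapter shows that an existing frame iterator can still feed a buffer-based API, and `apply_gain` shows the kind of tight loop over a whole buffer that the usual performance argument is about: less per-frame call overhead and a loop that is easier for the compiler to vectorize.

```rust
/// One stereo frame.
type Frame = [f32; 2];

/// Buffer-based rendering: fill a whole slice of frames per call (hypothetical trait).
trait BufferSource {
    fn render(&mut self, out: &mut [Frame]);
}

/// Adapter so an existing frame-by-frame iterator can serve a buffer-based API.
/// Whenever the iterator returns `None`, that frame is filled with silence.
struct FromIter<I>(I);

impl<I: Iterator<Item = Frame>> BufferSource for FromIter<I> {
    fn render(&mut self, out: &mut [Frame]) {
        for slot in out.iter_mut() {
            *slot = self.0.next().unwrap_or([0.0, 0.0]);
        }
    }
}

/// A natively buffer-based processing step: one simple loop over the whole buffer.
fn apply_gain(buf: &mut [Frame], gain: f32) {
    for frame in buf.iter_mut() {
        frame[0] *= gain;
        frame[1] *= gain;
    }
}
```

An adapter like this would allow a gradual migration: the existing iterator keeps working while individual processing steps move to buffers.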

Rendering audio while the song being rendered can change at any moment is a complicated problem. The audio thread has to be able to access the current song at any time without ever blocking, while the UI thread has to be able to edit it from time to time.

I wrote my own concurrent data structure for this, `simple-left-right`. It stores two copies of the data and switches which copy the reader and the writer have access to. It keeps the copies in sync by only allowing modifications through an Operation that can be cloned, stored, and applied to the other copy later.
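A single-threaded sketch of the left-right idea, showing how the two copies stay in sync. This is not the `simple-left-right` API, and it leaves out the atomics a real implementation needs to know when the reader has actually moved off the old copy before anything is replayed onto it. `Operation`, `LeftRight`, `publish` and the `Push` example operation are hypothetical names. Each operation is cloned: the clone is applied to the writer's copy right away, and the original is stored and replayed onto the other copy after the swap.

```rust
/// An edit that can be cloned, stored, and applied to either copy later.
trait Operation<T>: Clone {
    fn apply(self, target: &mut T);
}

/// Single-threaded model of a left-right structure (hypothetical, not the crate's API).
struct LeftRight<T, O> {
    copies: [T; 2],
    read_index: usize, // which copy the reader currently sees
    pending: Vec<O>,   // ops already applied to the write copy, not yet to the other one
}

impl<T: Clone, O: Operation<T>> LeftRight<T, O> {
    fn new(value: T) -> Self {
        LeftRight { copies: [value.clone(), value], read_index: 0, pending: Vec::new() }
    }

    fn read(&self) -> &T {
        &self.copies[self.read_index]
    }

    fn write_index(&self) -> usize {
        1 - self.read_index
    }

    /// Writer side: apply a clone to the write copy and keep the op for later.
    fn apply(&mut self, op: O) {
        let w = self.write_index();
        op.clone().apply(&mut self.copies[w]);
        self.pending.push(op);
    }

    /// Swap roles so the reader sees the edits, then replay the stored ops onto
    /// the copy the reader just left. A concurrent version must first wait
    /// until the reader has really stopped using that copy.
    fn publish(&mut self) {
        self.read_index = self.write_index();
        let w = self.write_index();
        for op in self.pending.drain(..) {
            op.apply(&mut self.copies[w]);
        }
    }
}

/// Example operation on a `Vec<i32>`.
#[derive(Clone)]
struct Push(i32);

impl Operation<Vec<i32>> for Push {
    fn apply(self, target: &mut Vec<i32>) {
        target.push(self.0);
    }
}
```

Storing operations instead of copying the whole song means each edit costs the same small amount of work twice, no matter how large the song is.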